We’re living in the era of infinite data – in which the stream pouring forth from computational models and simulations can be as voluminous as outputting every value at every time-step, drowning disks and researchers alike with high-cadence, arbitrarily large data-sets. Rather than struggling to make models bigger, the challenge is now to keep them under control.

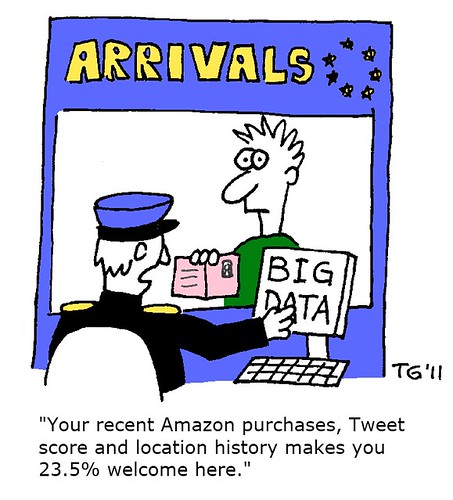

More than simply bits and bytes, big data is now a billions of dollar business proposition. From retailers to manufacturers to data hungry politicians, are fast discovering the power of turning consumers’ GPS codes and buying community histories into bottom-line-enhancing insights via ML / AI. But while harnessing the power of data analytics is clearly a competitive advantage, overzealous data mining can easily backfire. As organisations become experts at slicing and dicing data to reveal details as personal as mortgage defaults and heart attack risks, the threat of egregious privacy violations grows.

The challenge of synthesizing information scales with this complexity, so simulators must develop more complex tools and techniques to interrogate the data. The tools that simulators require must deal with both complexity and quantity, and for this month’s topic on processing, visualizing and understanding scientific data – both for construction & destruction.

Web & mobile surfers have been giving up their personal data for years, often without any thought of how it might be used. That’s now all changed, thanks to the huge stink over the unauthorized harvesting of users’ information by many profiteering agencies – private & government. Question now becomes what safeguards are needed to put a halt to future abuses and just who will enforce those safeguards.

In the future, everyday objects will come outfitted with smart cameras and the latest in visual or voice-recognition technology. The effect will be to provide vast new streams of consumer data. Marketers will be far better able to track consumer sentiment leading up to the decision to purchase a particular product, and do it in real time. This has huge implications. If we had sizable data streams before—well, they’re about to be increased exponentially, giving marketers much deeper insights in consumer behavior.

Living in a virtual world, it’s at times easy to forget that the data we aggregate is actually private property. But the industry is increasingly coming to think of data more like consumer’s private property, less simply as a tool to gain advantage over competitors. As a result, even more guardrails will be placed around how that data is used.

We hear so much talk of permissions – the idea that users agree to give up their data in exchange for access to free content. Now we have all this controversy over how that data is being used. Is part of the problem that users just aren’t paying attention to the small print? It’s critical that data owners – consumers – are aware of what we’re doing with their data, and privacy disclosure agreements are at the heart of it all. They need to take responsibility at some level for knowing what data is out there about themselves and how that data is being used. It’s their data. It belongs to them.