Apache Spark is a fast, general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, along with an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. Spark can be configured with several cluster managers, and it can also run in local mode and standalone mode. Standalone mode is a good fit for developing applications in Spark. Spark processes run in the JVM, so Java must be installed on every machine that will run a Spark job.

nano /etc/apt/sources.list

deb http://ppa.launchpad.net/linuxuprising/java/ubuntu bionic main

apt install dirmngr

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys EA8CACC073C3DB2A

apt update

apt install oracle-java11-installer

apt install oracle-java11-set-default
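Before moving on to Spark, it may be worth confirming that a Java runtime is actually on the PATH. The following is only a minimal sketch; the helper name `require_cmd` is hypothetical and not part of any package.

```shell
# Hypothetical helper: succeed only if the named command is on the PATH.
require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        return 0
    fi
    echo "missing command: $1" >&2
    return 1
}

# Print the Java version only when java is available.
if require_cmd java; then
    java -version
fi
```

The same helper could be reused later for other tools this guide relies on, such as wget or docker.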

wget http://mirrors.estointernet.in/apache/spark/spark-2.4.3/spark-2.4.3-bin-hadoop2.7.tgz

tar xvzf spark-2.4.3-bin-hadoop2.7.tgz

ln -s spark-2.4.3-bin-hadoop2.7 spark

nano ~/.bashrc

export SPARK_HOME=/root/spark

export PATH=$SPARK_HOME/bin:$PATH

source ~/.bashrc
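Because ~/.bashrc is edited by hand here, repeating the steps can leave duplicate lines in it. One possible alternative is sketched below, assuming a hypothetical helper named `append_once` that adds a line only when it is not already present.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not already there.
append_once() {
    file=$1
    line=$2
    touch "$file"
    # -F: fixed string, -x: match the whole line, -q: quiet
    if ! grep -Fxq -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example usage (single quotes keep $SPARK_HOME unexpanded inside the file):
# append_once ~/.bashrc 'export SPARK_HOME=/root/spark'
# append_once ~/.bashrc 'export PATH=$SPARK_HOME/bin:$PATH'
```

Running the two example lines twice should leave ~/.bashrc with a single copy of each entry.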

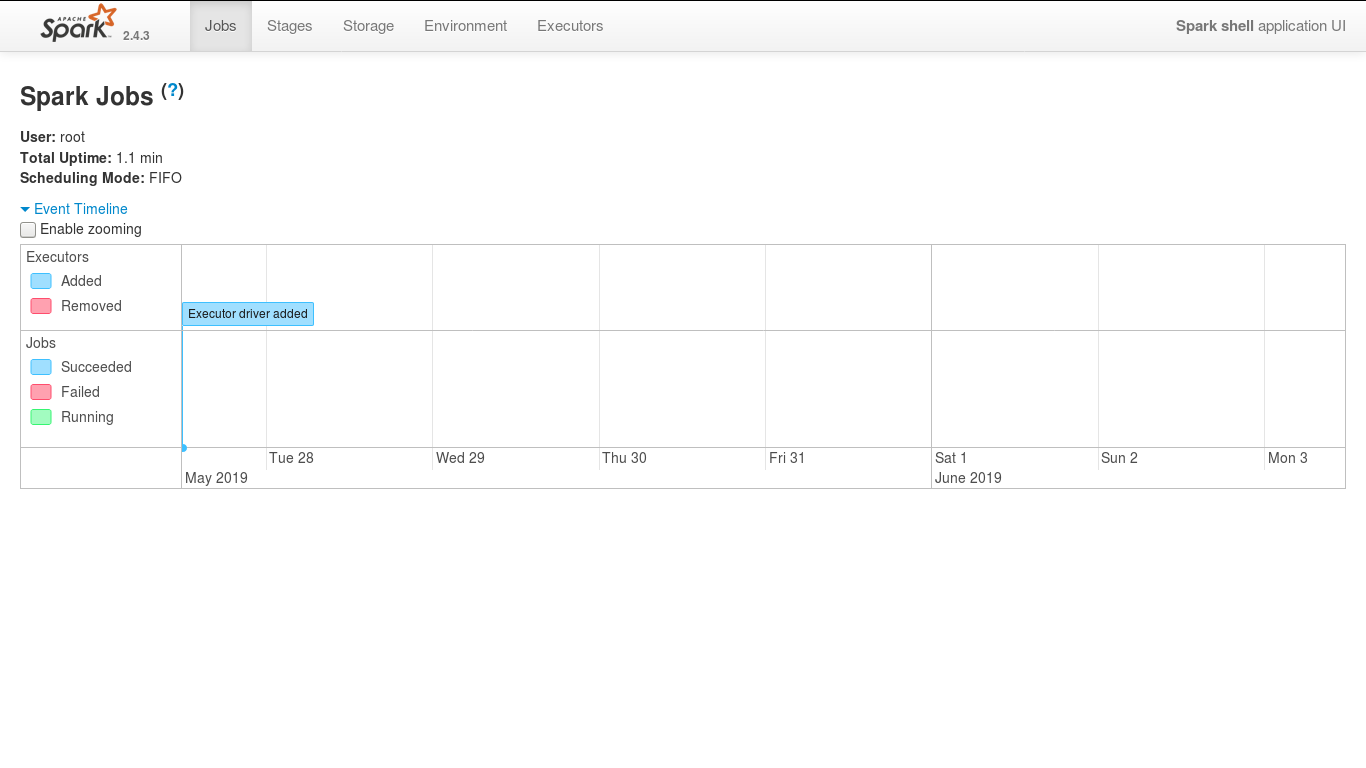

./spark/bin/spark-shell

Next we will write a basic Scala application that loads a file and prints its content to the console. Before writing any application, let's get familiar with a few terms used in Spark applications.

Application jar

The user program and its dependencies are bundled into the application jar, so that everything the application depends on is available to it.

Driver program

It acts as the entry point of the application. This is the process that starts the whole execution.

Cluster Manager

This is an external service that manages the resources needed for job execution.

It can be Spark's standalone manager, Apache Mesos, YARN, etc.

Deploy Mode

Cluster – The driver runs inside the cluster.

Client – The driver runs outside the cluster, on the machine that submits the job.
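
The cluster manager and the deploy mode are typically chosen on the `spark-submit` command line through its `--master` and `--deploy-mode` options. The sketch below only assembles and prints such a command rather than running it. The helper name `submit_cmd` is hypothetical, and the examples jar path is an assumption about the layout of the Spark 2.4.3 download used in this guide.

```shell
# Hypothetical helper: print a spark-submit command for a given master and deploy mode.
# Usage: submit_cmd MASTER DEPLOY_MODE MAIN_CLASS APP_JAR
submit_cmd() {
    printf 'spark-submit --master %s --deploy-mode %s --class %s %s\n' "$1" "$2" "$3" "$4"
}

# Assumed example: the SparkPi class from the examples jar, run locally in client mode.
submit_cmd 'local[2]' client org.apache.spark.examples.SparkPi \
    /root/spark/examples/jars/spark-examples_2.11-2.4.3.jar
```

With a YARN cluster manager, passing `yarn` and `cluster` instead would ask for the driver to be launched inside the cluster.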

Worker Node

A node in the cluster that runs the application code, ideally the machine that holds the data.

Executor

A process launched on the worker node that runs tasks.

It uses the worker node's resources and memory.

Task

The fundamental unit of a job, run by an executor.

Job

It is a combination of multiple tasks.

Stage

Each job is divided into smaller sets of tasks called stages. Stages run in sequence and depend on one another.

SparkContext

It gives the application program access to the distributed cluster.

It acts as a handle to the resources of the cluster.

We can pass custom configuration through the SparkContext object.

It can be used to create RDDs, accumulators and broadcast variables.

RDD (Resilient Distributed Dataset)

The RDD is the core of Spark's API.

It distributes the source data into multiple chunks on which we can operate in parallel.

Various transformations and actions can be applied to an RDD.

An RDD is created through the SparkContext.

Accumulator

It is used to carry a shared variable across all partitions.

Accumulators can be used to implement counters (as in MapReduce) or sums.

An accumulator's value can only be read by the driver program.

It is created through the SparkContext.

Broadcast Variable

Another way of sharing variables across partitions.

It is a read-only variable.

It allows the programmer to keep a read-only variable cached on each machine rather than shipping a copy of it with every task, which avoids repeated data transfer.

Any common data that is needed by each stage is distributed across all the nodes.

Spark provides programming APIs for manipulating data in four languages: Java, R, Scala and Python. Three of them, R, Scala and Python, come with an interactive shell. Unfortunately, Spark does not provide an interactive shell for Java as of now.

ETL Operation in Apache Spark

In this section we will learn a basic ETL (Extract, Transform and Load) operation in the interactive shell.

Hadoop (docker-search) on Ubuntu:

nano /etc/apt/sources.list

deb http://ppa.launchpad.net/linuxuprising/java/ubuntu bionic main

apt install dirmngr

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys EA8CACC073C3DB2A

apt update

apt install oracle-java11-installer

apt install oracle-java11-set-default

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

ssh localhost

wget http://mirrors.estointernet.in/apache/hadoop/common/hadoop-3.2.0/hadoop-3.2.0.tar.gz

tar xzf hadoop-3.2.0.tar.gz

mv hadoop-3.2.0 hadoop

nano ~/.bashrc

export HADOOP_HOME=/root/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

nano /root/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

hdfs namenode -format

cd /root/hadoop/sbin/

./start-dfs.sh

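cd /root/hadoop/etc/hadoop

nano core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

nano hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///root/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///root/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>

nano mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

nano yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>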
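
The property names and values above go into Hadoop's XML configuration files as `<property>` entries inside a `<configuration>` element. As an alternative to editing each file in nano, the sketch below generates such a file from name/value pairs. The helper name `write_site_xml` is hypothetical, and the values are written as-is without XML escaping, which is an assumption that holds for the simple values used in this guide.

```shell
# Hypothetical helper: write a Hadoop *-site.xml file from name/value pairs.
# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Note: values are not XML-escaped, so this only suits simple values.
write_site_xml() {
    out=$1
    shift
    {
        echo '<?xml version="1.0"?>'
        echo '<configuration>'
        while [ "$#" -ge 2 ]; do
            echo '  <property>'
            echo "    <name>$1</name>"
            echo "    <value>$2</value>"
            echo '  </property>'
            shift 2
        done
        echo '</configuration>'
    } > "$out"
}

# Example usage, with the values used in this guide:
# write_site_xml core-site.xml fs.default.name hdfs://localhost:9000
# write_site_xml hdfs-site.xml dfs.replication 1 \
#     dfs.name.dir file:///root/hadoop/hadoopdata/hdfs/namenode \
#     dfs.data.dir file:///root/hadoop/hadoopdata/hdfs/datanode
```

Note that the helper overwrites the target file, so any properties already present in it would be lost.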

hdfs namenode -format

cd $HADOOP_HOME/sbin/

./start-dfs.sh

./start-yarn.sh

nano ~/.bashrc

wget http://mirrors.estointernet.in/apache/spark/spark-2.4.3/spark-2.4.3-bin-hadoop2.7.tgz

tar xvzf spark-2.4.3-bin-hadoop2.7.tgz

ln -s spark-2.4.3-bin-hadoop2.7 spark

apt-get install hadoop-2.7 hadoop-0.20-namenode hadoop-0.20-datanode hadoop-0.20-jobtracker hadoop-0.20-tasktracker

nano ~/.bashrc

export SPARK_HOME=/root/spark

export PATH=$SPARK_HOME/bin:$PATH

source ~/.bashrc

./spark/bin/spark-shell

apt-get install apt-transport-https ca-certificates curl gnupg2 software-properties-common

curl -fsSL https://download.docker.com/linux/debian/gpg | apt-key add -

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/debian stretch stable"

apt-get update

apt-get install docker-ce

systemctl status docker

docker search hadoop

docker pull hadoop

docker images

docker run -i -t hadoop /bin/bash

docker ps

docker ps -a

docker start <container-id>

docker stop <container-id>

docker attach <container-id>

Running your first crawl job in minutes

wget https://raw.githubusercontent.com/USCDataScience/sparkler/master/bin/dockler.sh

Starts docker container and forwards ports to host

bash dockler.sh

Inject seed URLs

/data/sparkler/bin/sparkler.sh inject -id 1 -su 'http://www.bbc.com/news'

Start the crawl job

/data/sparkler/bin/sparkler.sh crawl -id 1 -tn 100 -i 2 # id=1, top 100 URLs, do -i=2 iterations

Running Sparkler with seed urls file:

nano sparkler/bin/seed-urls.txt

Copy and paste your URLs into the file.

Inject the seed URLs using the following command:

/sparkler/bin/sparkler.sh inject -id 1 -sf seed-urls.txt

Start the crawl job.

To crawl until there are no new URLs left, use -i -1. Example: /data/sparkler/bin/sparkler.sh crawl -id 1 -i -1
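
Before injecting a seed file, a quick sanity check can catch stray lines left over from pasting. The sketch below assumes the seed file holds one URL per line, which is an assumption and not something this guide states. The helper name `check_seed_file` is hypothetical.

```shell
# Hypothetical helper: succeed only if every non-empty line starts with http:// or https://
check_seed_file() {
    # grep -v selects lines that do NOT match; finding any such line makes the check fail.
    if grep -Ev '^(https?://.*)?$' "$1" >/dev/null; then
        echo "non-URL line found in $1" >&2
        return 1
    fi
    return 0
}

# Example usage:
# cat > seed-urls.txt <<'EOF'
# http://www.bbc.com/news
# EOF
# check_seed_file seed-urls.txt && echo "seed file looks fine"
```

The check is deliberately loose: it only looks at the scheme prefix and does not validate the rest of each URL.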

Access the dashboard at http://localhost:8983/banana/ (forwarded from the Docker container).

Apache Solr is an open-source search platform written in Java. Solr provides full-text search, spelling suggestions, custom document ordering and ranking, snippet generation and highlighting. We will help you install Apache Solr on Debian using Solution Point VPS Cloud.

apt install default-jdk

java -version

wget http://www-eu.apache.org/dist/lucene/solr/8.2.0/solr-8.2.0.tgz

tar xzf solr-8.2.0.tgz solr-8.2.0/bin/install_solr_service.sh --strip-components=2

bash ./install_solr_service.sh solr-8.2.0.tgz

systemctl stop solr

systemctl start solr

systemctl status solr

su - solr -c "/opt/solr/bin/solr create -c spcloud1 -n data_driven_schema_configs"

http://demo.osspl.com:8983

Your own Rocketchat Server on Ubuntu:

snap install rocketchat-server

snap refresh rocketchat-server

service snap.rocketchat-server.rocketchat-server status

sudo service snap.rocketchat-server.rocketchat-mongo status

sudo service snap.rocketchat-server.rocketchat-caddy status

sudo journalctl -f -u snap.rocketchat-server.rocketchat-server

sudo journalctl -f -u snap.rocketchat-server.rocketchat-mongo

sudo journalctl -f -u snap.rocketchat-server.rocketchat-caddy

How do I back up my snap data?

sudo service snap.rocketchat-server.rocketchat-server stop

sudo service snap.rocketchat-server.rocketchat-mongo status | grep Active

Active: active (running) (…)

sudo snap run rocketchat-server.backupdb

sudo service snap.rocketchat-server.rocketchat-server start
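
The stop, backup and start steps above can be wrapped so that the server is started again even when the backup fails. This is only a sketch: the function name `backup_rocketchat` is hypothetical, and the MongoDB status check from the manual steps is left out. Passing `echo` as the first argument turns it into a dry run that just prints the commands.

```shell
# Hypothetical wrapper around the backup steps above.
# The first argument is the command prefix; pass "echo" for a dry run that only prints the commands.
backup_rocketchat() {
    run=${1:-sudo}
    $run service snap.rocketchat-server.rocketchat-server stop || return 1
    # Remember whether the backup worked, but always try to start the server again.
    status=0
    $run snap run rocketchat-server.backupdb || status=1
    $run service snap.rocketchat-server.rocketchat-server start || status=1
    return "$status"
}

# Dry run: prints the three commands without executing them.
backup_rocketchat echo
```

Calling `backup_rocketchat` with no argument would run the same three commands through sudo.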

sudo service snap.rocketchat-server.rocketchat-server restart

sudo service snap.rocketchat-server.rocketchat-mongo restart

sudo service snap.rocketchat-server.rocketchat-caddy restart
